
I used Spin to build and deploy a WebAssembly application to Fermyon Cloud: let me explain why

Context

When we think of cloud computing, we often think of two ways to deploy applications to the cloud: containers and virtual machines.

Virtual machines can be likened to houses, each standing on its own plot within a larger piece of land shared with other plots. Each house has its own plumbing and electricity, which makes it self-contained and ensures one house cannot impact another. This independence comes at a cost: each VM requires a portion of the physical server's resources to run its own operating system as well as its applications, which leads to inefficient resource usage. You prioritize security, trading off resource efficiency.

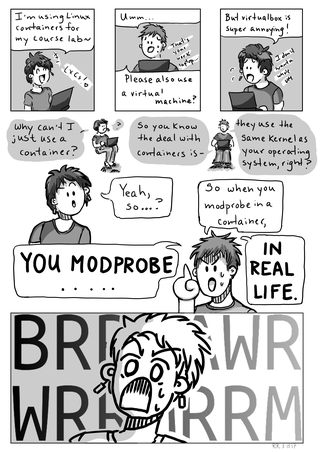

Containers, on the other hand, are like apartments in an apartment building. They share common infrastructure, namely the host's OS kernel, while maintaining isolation at the application level. With this option we prioritize resource efficiency, trading off security.

But why...

Because containers share the host OS's kernel, if one container is compromised, the entire system could be compromised.

To quote one Stack Overflow answer:

"Ostensibly, a compromised container should not be able to harm the host. However, container security is not great, and there are usually many vulnerabilities that allow a privileged container user to compromise the host. In this way, containers are often less secure than full virtual machines. That does not mean that virtual machines can't be hacked. They are just not quite as bad.

If the kernel is exploited in a virtual machine, the attacker still needs to find a bug in the hypervisor. If the kernel is exploited in a container, the entire system is compromised, including the host. This means that kernel security bugs, as a class, are far more severe when containers are used."

Containers are typically implemented using Linux namespaces. You can think of a namespace as a wrapper that provides a layer of isolation for running processes: processes inside a container can only see and interact with resources in the same namespace, and they remain unaware of processes in other namespaces. Using the apartment analogy, namespaces divide the apartment into rooms, and this is where security risks can creep in. If someone finds a structural defect in the walls separating the rooms, they could move between rooms without going through the door. In container terms, such defects are flaws or bugs in the namespace implementation or in the shared kernel code underneath it. One example is CVE-2022-0185, a Linux kernel vulnerability that could be exploited to escape a container.
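To make namespaces a little less abstract: on Linux, the namespaces a process belongs to are exposed as symbolic links under /proc/<pid>/ns/, whose targets look like pid:[4026531836]. Processes in the same container resolve to the same targets, yet every container still runs on the one shared kernel. The sketch below parses and compares those targets. The helper names are my own, and this is an illustration of namespace bookkeeping, not part of any container runtime.

```rust
use std::fs;

/// Splits a namespace link target such as "pid:[4026531836]" into its
/// namespace type ("pid") and inode number (4026531836).
fn parse_ns_link(target: &str) -> Option<(&str, u64)> {
    let (kind, rest) = target.split_once(':')?;
    let inode = rest.strip_prefix('[')?.strip_suffix(']')?;
    Some((kind, inode.parse::<u64>().ok()?))
}

/// Two processes share a namespace when their links name the same
/// namespace type and the same inode.
fn same_namespace(a: &str, b: &str) -> bool {
    match (parse_ns_link(a), parse_ns_link(b)) {
        (Some(x), Some(y)) => x == y,
        _ => false,
    }
}

/// Reads the namespace links of the current process. On Linux each entry
/// under /proc/self/ns is a symbolic link; on other systems the reads fail
/// and the entry is skipped.
fn current_namespaces() -> Vec<(String, String)> {
    ["pid", "net", "mnt", "uts", "ipc", "user"]
        .iter()
        .filter_map(|ns| {
            let target = fs::read_link(format!("/proc/self/ns/{ns}")).ok()?;
            Some((ns.to_string(), target.to_string_lossy().into_owned()))
        })
        .collect()
}
```

Two shells inside the same container would report identical targets from current_namespaces, while a shell on the host would not, even though all of them share a kernel.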

Why WebAssembly

WebAssembly's significance is that code is compiled to a compact bytecode, which the WebAssembly runtime then translates into the native instructions of the target machine, giving near-native performance. (An interpreted language, for example, needs to be read and executed by another program at run time, whereas a compiled language, once compiled, is expressed in the instructions of the target machine.)

This is a big advantage for cloud applications: the resulting modules are small and lightweight, which reduces startup times and resource consumption.
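To make the interpreted-versus-compiled distinction concrete, here is a toy interpreter for three stack instructions loosely modelled on Wasm's i32.const, i32.add and i32.mul. An interpreter like this decodes every instruction at run time; runtimes such as Wasmtime instead compile the module's bytecode into the host's native instructions, which is where the speed comes from. The Instr enum is a simplification for illustration, not the real Wasm binary encoding.

```rust
/// A tiny subset of stack-machine instructions, loosely modelled on
/// WebAssembly's i32.const / i32.add / i32.mul (not the real encoding).
#[derive(Debug, Clone, Copy)]
enum Instr {
    Const(i32),
    Add,
    Mul,
}

/// Pops two operands, applies `op`, and pushes the result.
fn binary(stack: &mut Vec<i32>, op: fn(i32, i32) -> i32) -> Result<(), String> {
    let b = stack.pop().ok_or("stack underflow")?;
    let a = stack.pop().ok_or("stack underflow")?;
    stack.push(op(a, b));
    Ok(())
}

/// Interprets the instructions one at a time and returns the value left
/// on top of the stack. Wasm i32 arithmetic wraps on overflow, so we do too.
fn interpret(code: &[Instr]) -> Result<i32, String> {
    let mut stack: Vec<i32> = Vec::new();
    for &instr in code {
        match instr {
            Instr::Const(v) => stack.push(v),
            Instr::Add => binary(&mut stack, i32::wrapping_add)?,
            Instr::Mul => binary(&mut stack, i32::wrapping_mul)?,
        }
    }
    stack.pop().ok_or_else(|| "empty stack".to_string())
}
```

For example, the sequence Const(2), Const(3), Add, Const(4), Mul evaluates (2 + 3) * 4. A compiling runtime would turn the same sequence into a handful of machine instructions instead of looping over it.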

Ok, but what about security...

WebAssembly modules execute within a sandboxed environment: each module runs in isolation and cannot reach outside its sandbox except through a pre-defined API that the host chooses to provide.
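One way to picture that "pre-defined API" is Wasm's import mechanism: a module must declare every host function it wants to call, and the host decides at instantiation time which of those it supplies. The sketch below models that check with plain Rust types. The Instance and instantiate names and the import names in the example are hypothetical; real runtimes such as Wasmtime do this through their own linker APIs.

```rust
use std::collections::HashMap;

/// A host function the runtime is willing to expose to guest code.
type HostFn = fn(i32) -> i32;

/// The capabilities granted to one module instance: only the imports it
/// declared and the host actually provided.
struct Instance {
    imports: HashMap<String, HostFn>,
}

/// Mimics instantiation: every import the module declares must be
/// satisfied by the host, otherwise the module never starts running.
fn instantiate(declared: &[&str], host: &HashMap<&str, HostFn>) -> Result<Instance, String> {
    let mut imports = HashMap::new();
    for name in declared {
        let f = host
            .get(name)
            .ok_or_else(|| format!("unresolved import: {name}"))?;
        imports.insert(name.to_string(), *f);
    }
    Ok(Instance { imports })
}

impl Instance {
    /// Guest code can only reach the outside world through its imports.
    fn call(&self, name: &str, arg: i32) -> Result<i32, String> {
        let f = self
            .imports
            .get(name)
            .ok_or_else(|| format!("no capability for {name}"))?;
        Ok(f(arg))
    }
}
```

For example, if the host only offers a double function, a module importing double instantiates and can call it, while a module that also imports http_get fails to instantiate at all, because the host never granted that capability.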

What does this mean...

Because of this isolated, sandboxed environment, the WebAssembly runtime enforces a boundary that prevents the kind of "moving between rooms" exploit described above for containers. A fault within a WebAssembly module, such as an out-of-bounds memory access, causes a trap that stops the module; it does not propagate to the host system or to other processes. All of this comes while keeping the portability of containers, because the runtime abstracts away the OS.
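A simplified model of how that boundary works: each Wasm module gets its own linear memory, and every load or store is checked against that memory's bounds. An access outside it traps, stopping the guest, instead of touching host memory. Real runtimes implement the check more cleverly (Wasmtime, for example, relies on virtual-memory guard pages), so treat this sketch as an illustration of the semantics only; the type names are my own.

```rust
/// A trap: the guest is stopped, but the host keeps running.
#[derive(Debug, PartialEq)]
enum Trap {
    OutOfBounds { addr: usize, size: usize },
}

/// A module's private linear memory. Guest addresses are offsets into this
/// buffer only, so no guest address can name host memory.
struct LinearMemory {
    bytes: Vec<u8>,
}

impl LinearMemory {
    fn new(size: usize) -> Self {
        LinearMemory { bytes: vec![0; size] }
    }

    /// Returns the byte range for an n-byte access at `addr`, or traps.
    /// checked_add guards against address arithmetic overflowing.
    fn check(&self, addr: usize, n: usize) -> Result<std::ops::Range<usize>, Trap> {
        match addr.checked_add(n) {
            Some(end) if end <= self.bytes.len() => Ok(addr..end),
            _ => Err(Trap::OutOfBounds { addr, size: self.bytes.len() }),
        }
    }

    /// Bounds-checked 4-byte store (Wasm memory is little-endian).
    fn store_u32(&mut self, addr: usize, value: u32) -> Result<(), Trap> {
        let range = self.check(addr, 4)?;
        self.bytes[range].copy_from_slice(&value.to_le_bytes());
        Ok(())
    }

    /// Bounds-checked 4-byte load.
    fn load_u32(&self, addr: usize) -> Result<u32, Trap> {
        let range = self.check(addr, 4)?;
        let mut buf = [0u8; 4];
        buf.copy_from_slice(&self.bytes[range]);
        Ok(u32::from_le_bytes(buf))
    }
}
```

With a 16-byte memory, a load at offset 12 succeeds, while a load at offset 14 returns Trap::OutOfBounds; the host decides what to do with the trap, and nothing outside the buffer is ever read.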

Application

With this context in mind, I decided to take WebAssembly for a spin. I spun up code to build a serverless AI application that teaches people about WebAssembly, using Spin, Fermyon's open-source developer platform for creating serverless functions. Not quite the triple entendre I intended, but let's hop into the application.

Building my application

My application is a Rust application that:

reads and renders text from a source

requests user input and returns a response from Llama 2

To implement this I would need to:

create an HTML file that displays the text, renders the input field, and displays the output from my server

leverage cloud-gpu to interact with Llama 2. This is a Spin plugin that enables you to use GPUs on Fermyon Cloud, allowing you to interact with models like Llama 2 without having to download them manually. This process involved initializing and deploying a cloud-gpu. The initialization process outputs variables I needed to create a runtime-config.toml file, which contains the runtime configuration data

use CSS to style my index.html

use JavaScript to manipulate the DOM and control what the user sees and at what point

use Rust to include my CSS file directly within the binary and control the functionality of the entire program
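The runtime-config.toml from the cloud-gpu step holds the values that spin cloud-gpu init prints. As far as I can tell from Spin's runtime configuration documentation, they go into an [llm_compute] table with type = "remote_http" plus the url and auth_token of the deployed GPU proxy. The hypothetical helper below only renders that table from the two values so the shape of the file is visible; the example URL and token are made up.

```rust
/// Hypothetical helper: renders the [llm_compute] table of runtime-config.toml
/// from the values printed by `spin cloud-gpu init`. The table and key names
/// follow Spin's runtime configuration docs as I understand them.
fn llm_compute_config(url: &str, auth_token: &str) -> String {
    // Assumes url and token contain no quotes or backslashes, so plain
    // interpolation yields valid TOML basic strings.
    format!(
        "[llm_compute]\ntype = \"remote_http\"\nurl = \"{url}\"\nauth_token = \"{auth_token}\"\n"
    )
}
```

With the file saved, running spin up --runtime-config-file runtime-config.toml starts the app locally while sending inference requests to the remote GPU.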

Manifest File

The Spin manifest file (spin.toml) describes which component handles each trigger, along with the Wasm modules and configuration that component uses.

```toml
spin_manifest_version = 2

[application]
name = "profwasm"
version = "0.1.0"
authors = ["Emmanuel Sibanda"]
description = "An AI application to help you learn about WebAssembly"

[[trigger.http]]
route = "/..."
component = "profwasm"

[component.profwasm]
source = "target/wasm32-wasi/release/profwasm.wasm"
allowed_outbound_hosts = []
ai_models = ["llama2-chat"]
key_value_stores = ["default"]

[component.profwasm.build]
command = "cargo build --target wasm32-wasi --release"
watch = ["src/**/*.rs", "Cargo.toml"]
```
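The route = "/..." line uses Spin's wildcard syntax: a route ending in /... matches that prefix and everything beneath it, while a route without it matches only that exact path. Here is a std-only sketch of that rule as I understand it; route_matches is my own helper, not Spin's router. It shows why one trigger is enough to catch both the page and the prompt requests.

```rust
/// Sketch of Spin-style route matching (hypothetical helper, not Spin's code):
/// "/..." matches every path, "/api/..." matches "/api" and anything under it,
/// and a route without "/..." matches only that exact path.
fn route_matches(route: &str, path: &str) -> bool {
    match route.strip_suffix("/...") {
        // Wildcard: the prefix itself, or the prefix followed by a new segment.
        Some(prefix) => {
            path == prefix
                || (path.starts_with(prefix) && path[prefix.len()..].starts_with('/'))
        }
        // Exact route.
        None => route == path,
    }
}
```

Under this rule, "/..." accepts "/", "/style.css" and any other path, whereas "/api/..." accepts "/api/chat" but not "/apix".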

In my scenario I only need to handle two kinds of request: a GET request, which displays my webpage with all the text to help you learn about Wasm, and a POST request, which sends input data to llama2-chat through the cloud-gpu "proxy". Because the single wildcard route /... sends every request to the profwasm component, which then branches on the HTTP method, I don't have to define multiple triggers. I am also watching my Cargo.toml and every Rust file in src for changes: when I run the spin watch command, Spin rebuilds my WebAssembly application and restarts it whenever one of those files changes.
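Inside the component, the GET/POST split comes down to branching on the request method and path. The sketch below models that with plain Rust types instead of spin_sdk's Request so it compiles on its own. The /style.css path, the Route enum and the placeholder HTML/CSS are my assumptions, not taken from the profwasm repository. In the real component the constants could be filled with include_str!, which embeds the files into the .wasm binary at compile time.

```rust
/// What the handler should do for a given request (hypothetical enum).
#[derive(Debug, PartialEq)]
enum Route {
    /// Serve an embedded static file with its content type.
    Static { content_type: &'static str, body: &'static str },
    /// Forward the request body to the LLM.
    Prompt,
    /// Any other method.
    MethodNotAllowed,
}

// Placeholders. A real build could use include_str!("../static/index.html")
// and include_str!("../static/style.css") (hypothetical paths) to embed the files.
const INDEX_HTML: &str = "<!doctype html><title>profwasm</title>";
const STYLE_CSS: &str = "body { font-family: sans-serif; }";

/// Decides how to answer a request based on its method and path.
fn route(method: &str, path: &str) -> Route {
    match (method, path) {
        ("GET", "/style.css") => Route::Static { content_type: "text/css", body: STYLE_CSS },
        // Every other GET gets the learning page.
        ("GET", _) => Route::Static { content_type: "text/html", body: INDEX_HTML },
        ("POST", _) => Route::Prompt,
        _ => Route::MethodNotAllowed,
    }
}
```

MethodNotAllowed maps naturally onto an HTTP 405 response, and Route::Prompt is where the Llama 2 call would take over.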

Implementing Serverless AI

At the time of writing, Spin supports two models, Llama2Chat and CodellamaInstruct. I specifically used the infer_with_options function so I could control the maximum number of tokens, primarily because of the nature of the application I am building: a user is asked to explain their understanding of Wasm and receives a response based on that explanation. Although the prompt I send to Llama 2 asks it to keep the answer fairly brief, the response can still be lengthy. According to the implementation, max_tokens defaults to 100 in InferencingParams, so I raised it to 256 to avoid cutting answers off.
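The prompt itself is where the verbosity limit lives. The exact prompt used in profwasm isn't shown here, so the following is a hypothetical builder that wraps the user's explanation in the Llama 2 chat template ([INST] and <<SYS>> markers) and asks for a short answer. It assumes the prompt string reaches the model as-is; the instruction wording and the sentence limit are illustrative. Even with such an instruction the model can overrun, which is why the max_tokens cap still matters.

```rust
/// Hypothetical prompt builder using the Llama 2 chat template.
/// The system instruction and sentence limit are illustrative only.
fn build_prompt(user_explanation: &str, max_sentences: u32) -> String {
    let system = format!(
        "You are a WebAssembly tutor. Evaluate the student's explanation of \
         WebAssembly and reply in at most {max_sentences} sentences."
    );
    // Remove template markers from user input so it cannot close the
    // instruction block early.
    let user = user_explanation.replace("[INST]", "").replace("[/INST]", "");
    format!("[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{} [/INST]", user.trim())
}
```

The resulting string would be passed as the prompt argument of infer_with_options.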

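```rust
use spin_sdk::http::Response;
use spin_sdk::llm::{infer_with_options, InferencingModel::Llama2Chat, InferencingParams};

// POST branch of the handler: send the prompt to Llama 2 and return its answer.
// (`answer_prompt` is an illustrative name for the enclosing function.)
fn answer_prompt(prompt: String) -> anyhow::Result<Response> {
    let infer_result = match infer_with_options(
        Llama2Chat,
        &prompt,
        InferencingParams {
            // Raise the cap from the default of 100 tokens so longer answers fit.
            max_tokens: 256,
            // Keep the defaults for every other inferencing option.
            ..Default::default()
        },
    ) {
        Ok(infer_result) => infer_result,
        Err(err) => {
            // Log the cause before hiding it behind a generic 500.
            eprintln!("Inference failed: {err:?}");
            return Ok(Response::builder()
                .status(500)
                .body("Internal Server Error")
                .build());
        }
    };

    println!("Llama2 response: {:?}", infer_result);

    if infer_result.text.is_empty() {
        return Err(anyhow::anyhow!("LLAMA response is empty"));
    }

    let response_body = infer_result.text;

    // The model returns plain text, so label it text/plain rather than JSON.
    Ok(Response::builder()
        .status(200)
        .header("content-type", "text/plain")
        .body(response_body)
        .build())
}
```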

Deploying the application

Deployment was a two-step process: first I deployed a cloud-gpu, and then I deployed my app using the spin deploy command.

App - https://profwasm-staajtrl.fermyon.app/

GitHub - https://github.com/EmmS21/profwasm